WordSense 2022

Python, OpenAI Whisper, GPT-3, Hardware Design

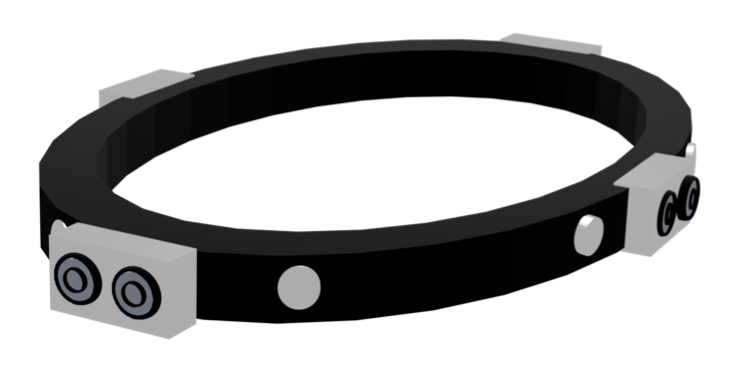

WordSense is a hardware product (a belt) that helps people with hearing disabilities navigate daily life through haptic (touch) feedback.

Link to GitHub Repository

❝ Blindness separates us from things, but deafness separates us from people. ❞ - Helen Keller

THE PROBLEM

People with hearing disabilities do not have the same autonomy as others. They cannot interact as fully as the people around them, which limits their freedom.

- People with hearing disabilities have to rely on sight to engage. When in conversation, they have to be facing the person to decipher lip movement or interpret sign language, and cannot multitask.

- People with hearing disabilities can only interpret direct conversations; they do not have the autonomy to passively understand conversations around them.

- Even though they can see, they experience more tunnel vision than others, because sounds normally indicate where to look and what to expect.

How might we use OpenAI’s Whisper and GPT-3 to unite people?

introducing...

a hardware product that assists people with hearing disabilities in navigating daily life with tactile sensory feedback

SOLUTION

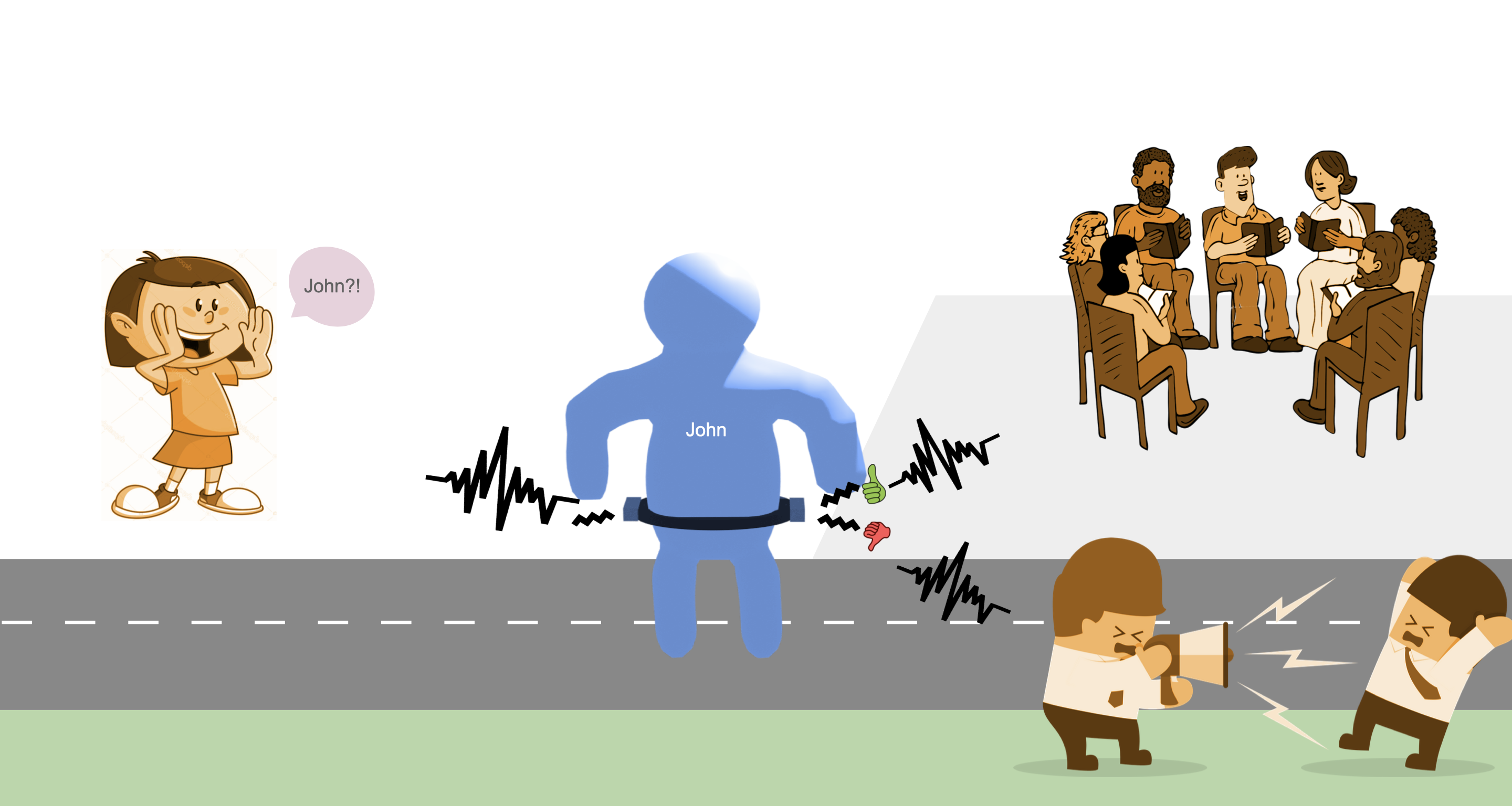

- People with hearing disabilities no longer have to rely solely on sight. They can feel the voices around them as physical sensations.

- Haptic feedback substitutes for sound cues and widens their field of awareness beyond tunnel vision. It helps direct their gaze and lets them know what to expect.

- The sentiment analysis feature gives people with hearing impairments a sense of the kinds of conversations occurring around them. This helps them investigate further and potentially avoid dangerous situations, for example an angry and potentially violent conversation nearby.

- With the name detection feature, people with hearing disabilities can know when their name is mentioned or called, without having to be near or facing the speaker.

HAPTIC TECHNOLOGY

Haptic technology is able to simulate the sense of touch and motion. This is useful to our users because:

- Haptic technology overcomes the tunnel vision our users experience when relying on sight by sending tactile signals; unlike sight, touch does not have a limited field of view.

- Haptic technology allows signals to take a wide range of forms: simple vibrations, vibrations in the form of letters, Morse code vibrations, etc.

Touch doesn’t have a limited field of view, allowing for a larger scope.

Imagine being able to feel sound waves as vibrations on your skin.

Imagine being able to connect with the world around you through physical sensations.

USER INTERACTION

case study 1

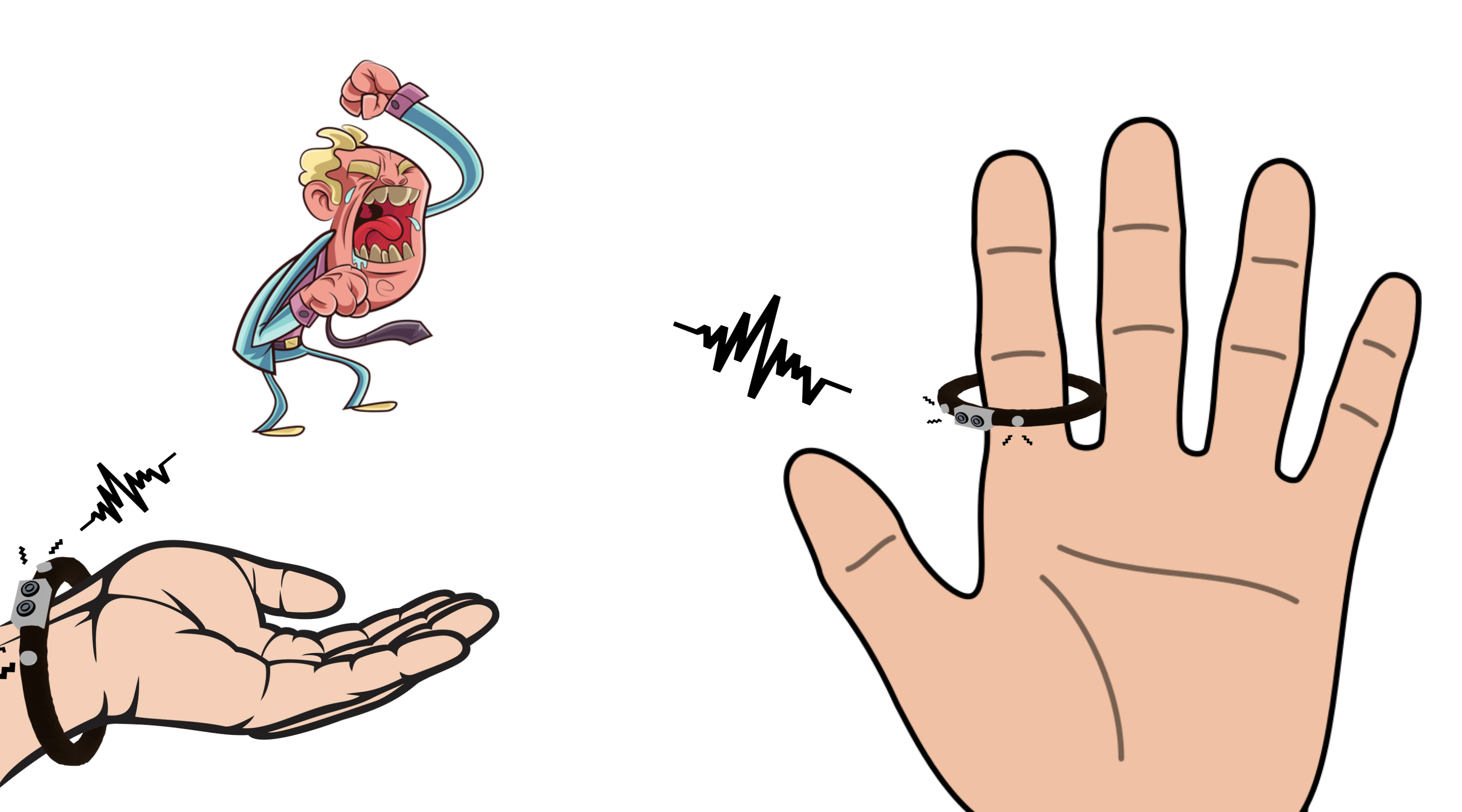

Conversation Interpretation with Haptic Belt

case study 2

Interpretation with Haptic Ring & Bracelet

Our product can be used:

- When walking on the street

- In social gatherings or events

- When navigating general daily life

PRODUCT JOURNEY

- Understanding how people with hearing disabilities currently communicate: sign language, lip movement

- Understanding how people who are both blind and deaf engage in conversation: tactile sign language drawn on the palm

- How do we apply the haptic solution to multiple conversations? How do we figure out which person’s voice to transcribe at a particular time?

- What if multiple haptic feedback devices are placed around the body? This could provide 3D spatial awareness, letting the user know where a sound is coming from.

- Understanding Whisper and the limitations of the model

  - Does Whisper detect non-speech sounds, e.g. police sirens, car sounds, and crashes? (Application: making users more aware of dangers around them)

  - Whisper has multilingual training, but can it transcribe into braille?

- How can words be turned into haptics? What patterns can be deployed?

  - Morse code

  - Mimicking speech intonation

  - Research on positive vs. negative patterns
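The idea of placing several haptic motors around the body to convey direction can be sketched as a mapping from a sound's bearing to the nearest motor on the belt. The sketch makes these assumptions:

- The belt is hypothetical and has `n_motors` evenly spaced motors.
- Motor 0 faces the front, and angles increase clockwise.
- The bearing comes from a separate direction-of-arrival estimate, for example a microphone array. Whisper itself returns text, not direction.

`motor_for_angle` is a hypothetical helper.

```python
import math


def motor_for_angle(angle_deg: float, n_motors: int = 8) -> int:
    """Return the index of the belt motor closest to a sound's bearing.

    Assumes motors are evenly spaced, motor 0 faces forward (0 degrees),
    and angles increase clockwise. Any angle, including negative ones,
    is accepted.
    """
    if n_motors <= 0:
        raise ValueError("n_motors must be positive")
    spacing = 360.0 / n_motors
    # Normalise to [0, 360), then round half up to the nearest motor slot;
    # the final modulo wraps angles just below 360 back to motor 0.
    return math.floor((angle_deg % 360.0) / spacing + 0.5) % n_motors
```

With the default eight motors, a voice behind the user (180°) maps to motor 4, and a voice directly to the left (270°, or -90°) maps to motor 6. Snapping to a single motor is the simplest choice. Blending intensity across the two nearest motors could give finer direction cues.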

MAKING JOURNEY

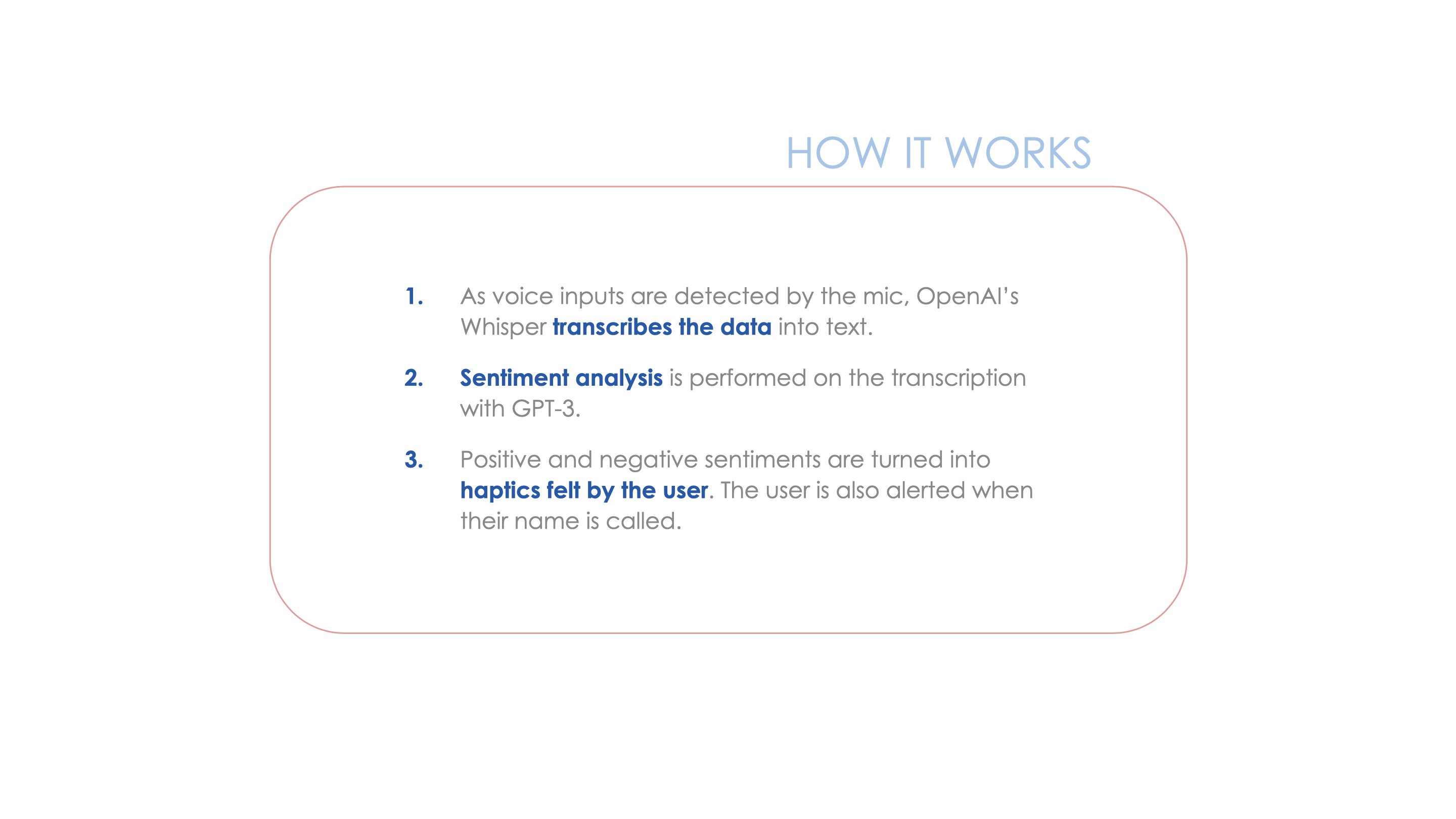

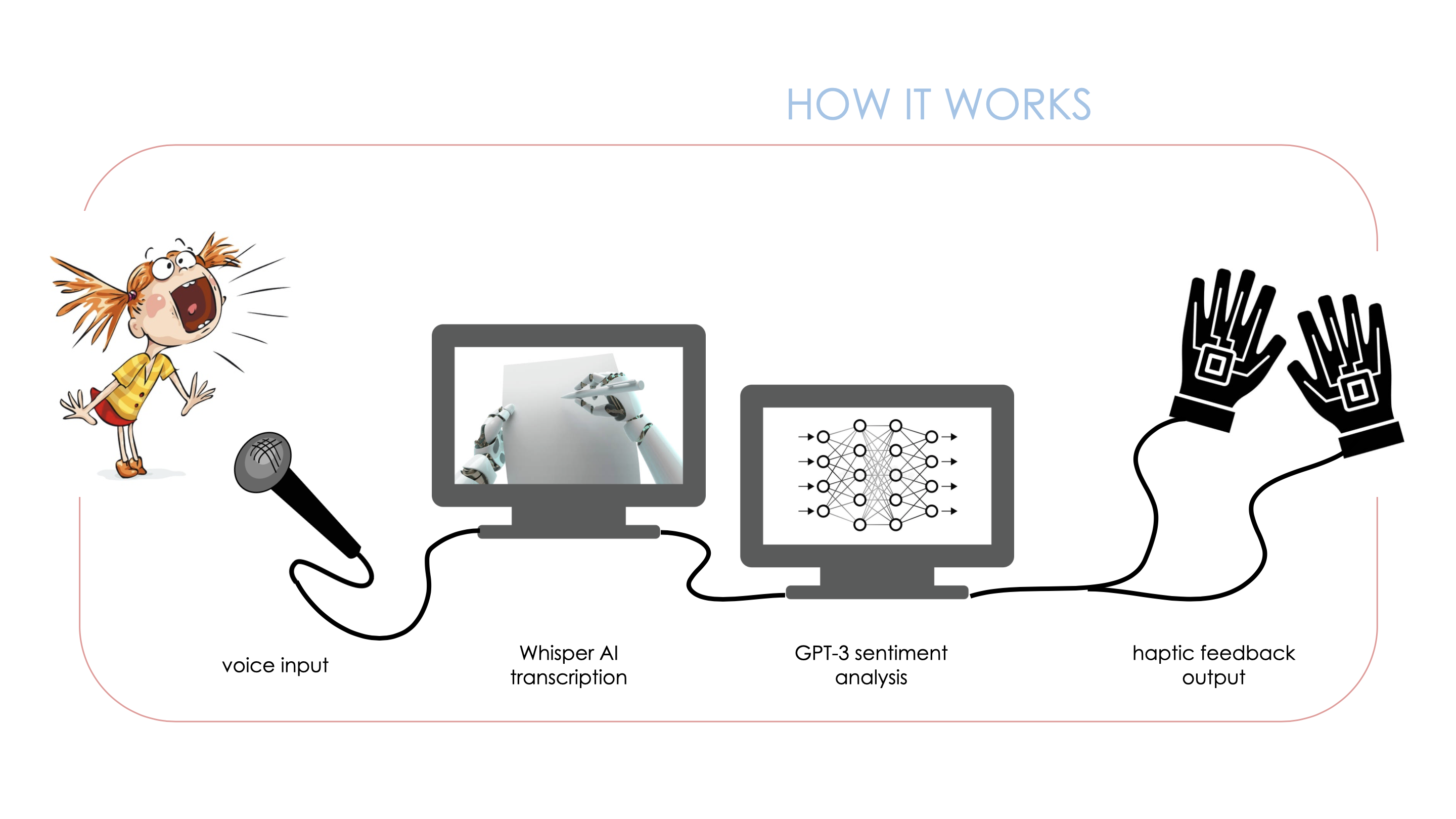

Sensing Danger

- Whisper AI transcribes speech

- GPT-3 performs sentiment analysis to discern whether speech is negative (potential danger)

- A binary signal representing positive or negative sentiment is sent to the haptic device

- The vibration motor is triggered to give feedback corresponding to the sentiment
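The Sensing Danger steps can be sketched in Python as below. The Whisper and GPT-3 calls are passed in as functions rather than hard-coded, so the logic can be checked without network access or hardware:

- With the `openai-whisper` package, the transcript could come from `whisper.load_model("base").transcribe(path)["text"]`.
- `send` could wrap a serial write such as pyserial's `Serial.write`.

The following are assumptions for illustration, not the project's actual code:

- the prompt wording;
- the `parse_sentiment` rules;
- the one-byte protocol (`b"1"` for negative, `b"0"` for positive).

```python
from __future__ import annotations

from typing import Callable

# Hypothetical prompt; the project's real GPT-3 prompt is not shown.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following speech as Positive or Negative.\n"
    "Speech: {text}\n"
    "Sentiment:"
)

# Hypothetical one-byte protocol sent to the haptic device.
SIGNAL = {"positive": b"0", "negative": b"1"}


def build_prompt(transcript: str) -> str:
    """Insert the Whisper transcript into the classification prompt."""
    return PROMPT_TEMPLATE.format(text=transcript.strip())


def parse_sentiment(completion: str) -> str | None:
    """Map raw model output to 'positive' or 'negative'; None if unclear."""
    words = completion.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!:;")
    return first if first in SIGNAL else None


def sense_danger(
    transcript: str,
    classify: Callable[[str], str],    # prompt -> completion text (e.g. GPT-3)
    send: Callable[[bytes], object],   # bytes -> device (e.g. a serial write)
) -> str | None:
    """Classify one transcript and send the matching signal, if any."""
    label = parse_sentiment(classify(build_prompt(transcript)))
    if label is not None:
        send(SIGNAL[label])
    return label
```

Returning `None` for unrecognised model output means no signal is sent when the classification is ambiguous. A more safety-oriented design might treat ambiguity as negative instead.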

Sensing Name Call

- User inputs name into system

- Whisper AI transcribes speech

- Transcription is checked for user’s name

- If user’s name detected, signal sent to haptic device

- Vibration motor triggered to give feedback
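The name check in the steps above can be as simple as a case-insensitive, whole-word search over the transcript. This is a minimal sketch. `name_mentioned` is a hypothetical helper, and the original project may have matched names differently.

```python
import re


def name_mentioned(transcript: str, name: str) -> bool:
    """Return True if `name` appears in `transcript` as a whole word.

    Matching is case-insensitive. The lookarounds require that the name is
    not directly preceded or followed by a letter, digit, or underscore.
    """
    name = name.strip()
    if not name:
        return False
    pattern = r"(?<!\w)" + re.escape(name) + r"(?!\w)"
    return re.search(pattern, transcript, flags=re.IGNORECASE) is not None
```

The word-boundary guards stop a name such as "Eric" from matching inside "America". Exact matching will still miss names that Whisper spells differently, so fuzzy matching (for example with the standard-library `difflib`) may be worth testing.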

PHYSICAL PRODUCT

Haptic Belt and Ring, modeled in Blender

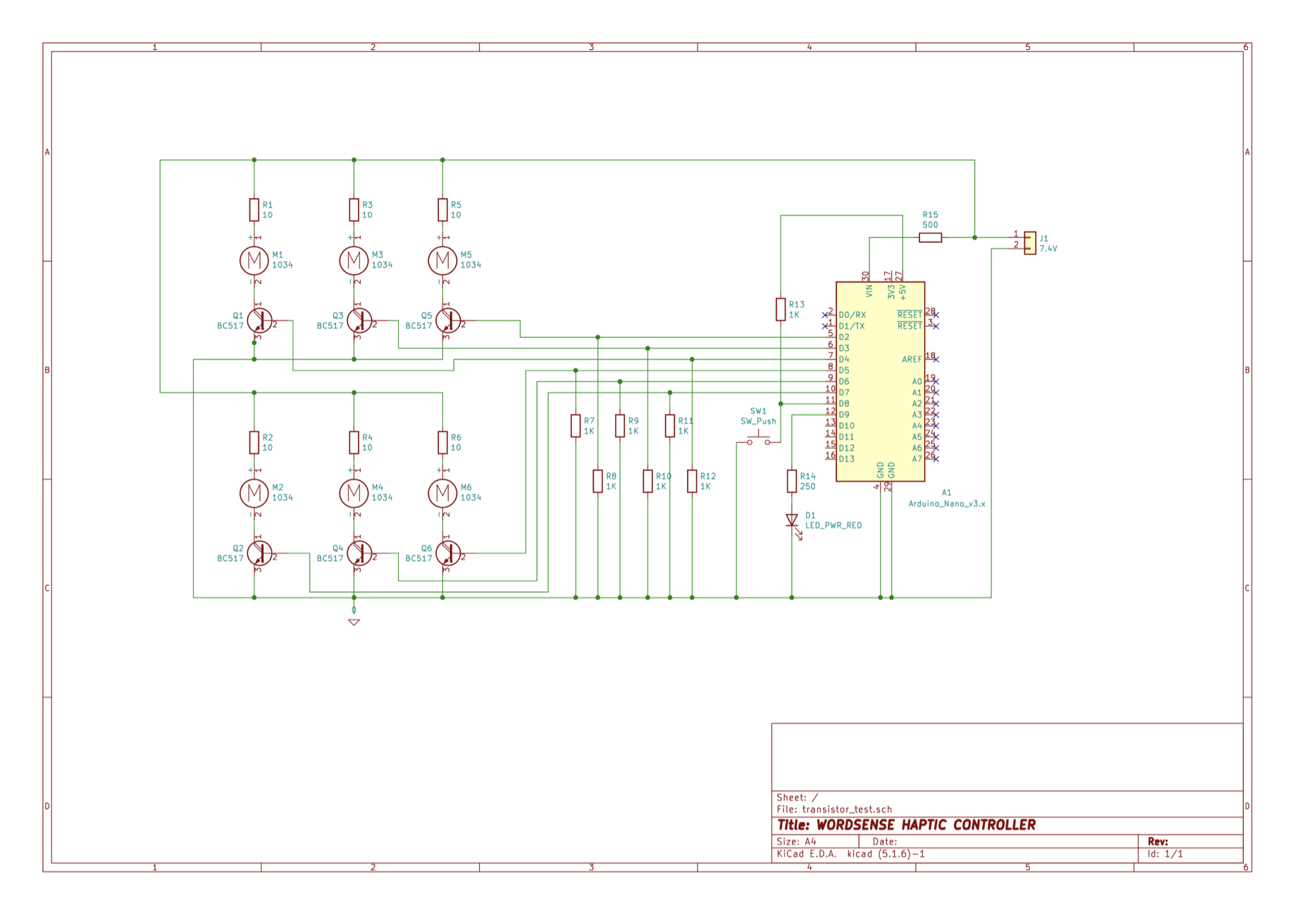

Using the Arduino microphone, we send the information from the microphone to a microchip that stores the logic code described above.

After reading the binary signal sent by that microchip, the controller either triggers the vibration motor or keeps it off.

WordSense Haptic Controller Circuit Design

FUTURE ITERATIONS

In future iterations, we hope to give people with hearing disabilities the same spatial scope that sound provides. We will implement this by:

- Applying more sensors and haptics on the belt, allowing for more accurate 360° inputs and corresponding haptics

- Testing haptic patterns and their interpretations to more accurately represent certain sentiments and emotions

- Expanding sentiment analysis to cover a broader range of emotions and events, each sending a unique signal

- Exploring how ASL can be translated into haptic feedback for more autonomy (being able to multitask and have conversations without having to face a person)
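One way to support a broader set of emotions and events, each with a unique signal, is a lookup table from labels to vibration patterns. The table can be paired with a check that no two labels share a pattern. The labels and timings below are hypothetical placeholders; the 2022 prototype used a binary positive/negative signal. Each pattern is a sequence of `(motor_on, duration_ms)` steps.

```python
from typing import Dict, Tuple

# One step is (motor_on, duration_ms); a pattern is a sequence of steps.
Pattern = Tuple[Tuple[bool, int], ...]

# Hypothetical labels and timings, not part of the 2022 prototype;
# real values would come from testing how users interpret each pattern.
PATTERNS: Dict[str, Pattern] = {
    "positive": ((True, 150),),                          # one short pulse
    "negative": ((True, 600),),                          # one long pulse
    "angry": ((True, 100), (False, 100)) * 3,            # rapid triple buzz
    "siren": ((True, 300), (False, 150)) * 2,            # slow double pulse
    "name_called": ((True, 100), (False, 100), (True, 400)),
}


def pattern_for(label: str) -> Pattern:
    """Return the pattern for a label; unknown labels produce no vibration."""
    return PATTERNS.get(label.strip().lower(), ())


def patterns_are_distinct(patterns: Dict[str, Pattern] = PATTERNS) -> bool:
    """Return True if every label maps to a different pattern."""
    return len(set(patterns.values())) == len(patterns)
```

If every pattern is distinct, the controller only needs to receive a label (or a short code for it) to replay the matching vibration.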

WordSense addresses several problems that people with hearing disabilities face: being unable to passively interpret conversations around them, having to face a person to read lip movement or sign language, being unable to multitask, and having tunnel vision due to the lack of sound as an indicator. WordSense eases the daily lives of people with hearing disabilities and gives them greater autonomy.

WordSense is an OpenAI Whisper Hackathon project developed from October 14 to 16, 2022. It applies OpenAI models such as Whisper and GPT-3 to the field of disability.

In Collaboration With:

Karthik Kaiplody

Fahad Shihab

Merik Lalic-Shields
